Increased web access to powerful computing networks, federal funding, and the declining cost of supercomputer parts have made the technology more available to college students and university researchers.

Once the domain of elite universities that regularly roped in millions of dollars in funding for scientific research, supercomputers have become more readily available across higher education, including through competitive programs that support the most worthy projects requiring supercomputing capabilities.

Cloud computing—accessing virtual warehouses of information and calculation tools via the internet—has played a major role in the democratization of supercomputing, experts said.

Students, faculty, and researchers can now connect to powerful servers from their own PCs instead of having to trek to a distant machine in a university or government lab.

Amazon Web Services (AWS), Amazon.com Inc.’s cloud-computing service, recently gave Harvard University students access to the company’s global computer infrastructure, allowing the students to complete projects and assignments that might have crashed their laptops and PCs.

“It makes possible resources that universities might not be able to provide for students,” said David Malan, a Harvard computer science professor whose class used the high-powered web resources. Amazon gave Malan’s students about $100 in server usage apiece.

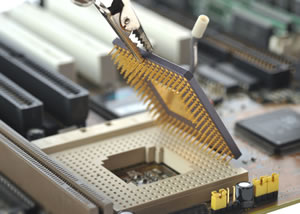

The falling price of parts needed to build a high-performing machine is another factor that has made supercomputers more widely available to researchers.

The cost of building a high-performance computer, about $5 million in the 1980s, dropped to about $1 million in the 1990s and has fallen sharply since 2000, to about $100,000 today, according to research from Scientific Computing, a website that tracks supercomputing trends.

Higher education’s supercomputing experts said quickly evolving technology might bring down the price of supercomputer parts, but the availability of new equipment often changes the very definition of what a high-performance machine is.

“When supercomputing equipment prices … more affordable, it is almost always true that it is no longer seen as supercomputing, and that other computers have greatly surpassed it in capability,” said Jim Ferguson, director of education, outreach, and training for the National Institute of Computational Sciences at the University of Tennessee, home to one of the world’s premier supercomputers.

Ferguson said “anyone who has a cheap laptop computer today” has a more powerful machine than the supercomputer he first worked on when he entered his field in 1987.

Making supercomputers more available to colleges and universities has depended largely on federal funding, particularly for high-performance computers on the Top 500, a list of the world’s most powerful supercomputers that includes machines at the University of Tennessee and the University of Colorado, among other U.S. campuses.

There were 43 universities on the Top 500 list when it was first published in 1993. As of 2009, there were more than 100 U.S. universities on the prestigious list.

The National Science Foundation’s TeraGrid program, which allocates more than 1 billion processor hours to researchers annually, helps support advanced research projects—many from higher education—that, for example, predict earthquakes and detect tumors with unprecedented accuracy.

And research from a group of academics published in the Journal of Information Technology Impact shows a strong correlation between consistent funding of supercomputers and research competitiveness, suggesting that colleges and universities that apply for and receive federal supercomputing grants often receive more research proposals than their peers.

TeraGrid last year made available 200 million processor hours and nearly 1 petabyte of data storage to 100 research teams worldwide. A petabyte is a unit of computer storage equal to 1 quadrillion bytes, or 1,000 terabytes. A terabyte is equal to 1,000 gigabytes.
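The storage definitions above can be checked with a few lines of Python. This is a minimal sketch that assumes decimal (SI) prefixes, matching the article's definitions, where each step up is a factor of 1,000; the constant and function names are illustrative and not taken from any library.

```python
# Decimal (SI) storage units, as defined in the article: each step is a factor of 1,000.
# These names are illustrative constants, not part of any standard library.
GIGABYTE = 10**9              # bytes
TERABYTE = 1_000 * GIGABYTE   # 1,000 gigabytes
PETABYTE = 1_000 * TERABYTE   # 1,000 terabytes = 1 quadrillion bytes


def petabytes_to_gigabytes(pb: float) -> float:
    """Convert petabytes to gigabytes using SI (power-of-ten) prefixes."""
    return pb * PETABYTE / GIGABYTE


print(PETABYTE == 10**15)          # True: one quadrillion bytes
print(PETABYTE // TERABYTE)        # 1000
print(petabytes_to_gigabytes(1))   # 1000000.0
```

The decimal convention is an assumption chosen to match the article. Some operating systems report sizes in binary units instead (1 PiB = 2^50 bytes), which are roughly 12.6 percent larger than their decimal counterparts.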

TeraGrid resources include more than a petaflop of computing capability, more than 30 petabytes of online and archival data storage, and access to 100 academic databases.

For a sense of scale, a petaflop, a measure of computer performance, is a thousand trillion floating-point operations per second.
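To make that scale concrete, the sketch below computes how long an idealized machine would take to finish a fixed amount of work. It assumes the machine sustains its full rated speed with no overhead, and the workload of 10^18 operations is a hypothetical figure chosen only for round numbers.

```python
# Idealized throughput arithmetic: time = operations / (operations per second).
# Assumes the machine sustains its full rated speed, which real systems rarely do.
TERAFLOPS = 10**12  # a trillion floating-point operations per second
PETAFLOPS = 10**15  # a thousand trillion floating-point operations per second


def seconds_for(operations: int, flops: int) -> float:
    """Ideal time in seconds to perform `operations` at a sustained rate of `flops`."""
    return operations / flops


# A 1-petaflop machine is 1,000 times faster than a 1-teraflop machine.
print(PETAFLOPS // TERAFLOPS)           # 1000

# Hypothetical workload of 10**18 operations at a sustained 1 petaflop.
print(seconds_for(10**18, PETAFLOPS))   # 1000.0
```

Because real applications typically run below a machine's peak rate, the result is best read as a lower bound on running time rather than a prediction.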

Shrinking the often-bulky supercomputer has helped one campus make room for more high-performance machines.

Purdue University, anticipating increased faculty demand for supercomputing access, has moved its supercomputing cluster, called Steele, into a portable computer center containing more than 7,200 processing cores.

Purdue, one of the first universities to use Hewlett-Packard’s Performance-Optimized Data Center (POD), says its use of the modular data center will free space for two or three new supercomputers at the campus’s Rosen Center for Advanced Computing, a move that will help Purdue officials meet growing demand for supercomputing resources from faculty and researchers.

“This gives us an expansion capability we have not had,” said Lon Ahlen, facilities manager for Information Technology at Purdue (ITaP), the school’s main technology organization.

The POD technology has helped Purdue’s technology decision makers avoid a costly reconfiguration of buildings to fit new supercomputing resources. According to a university announcement, the unit requires only a concrete pad for a foundation and a connection to the campus’s data and power lines.

“With the POD, we’ll have deployed an entire new data center in a matter of months at a fraction of the cost of a traditional data center, while being able to support all our current, as well as anticipated … faculty demand,” said John Campbell, associate vice president for academic technologies at Purdue.

The relatively pocket-sized supercomputing cluster will aid faculty members from a range of departments, including aeronautics, agronomy, climate science, communications, medicinal chemistry, molecular pharmacology, biology, engineering, physics, and statistics.

On some campuses, the sharing of supercomputing resources has expanded beyond faculty and students.

Brown University in Providence, R.I., in partnership with IBM, announced in November the opening of a new supercomputer in Brown’s Center for Computation and Visualization that will be made available to businesses, hospitals, nonprofit organizations, and other universities across the state.

Jan Hesthaven, professor of applied mathematics and director of Brown’s Center for Computation and Visualization, said providing access to the university’s new supercomputer—which can perform 14 trillion calculations per second—will be a valuable public service for Rhode Islanders researching ways to tackle climate change and improve education and health services.

“We live in an era where computer-enabled research cuts across all research and opens entirely new pursuits and innovations,” he said. “However, this work demands greater computational capacity in terms of speed and the ability to handle large amounts of data.”

Brown’s new supercomputer will be 50 times more powerful than any other machine available on the campus, according to the school’s website. The machine also will have about 70 times the memory of Brown’s next most powerful computer, and it will be six times more energy efficient than other high-powered machines on campus.

“We wanted to encourage new forms of collaborative research via the computing platform, a platform that researchers and students at smaller institutions would otherwise have difficulty accessing,” Hesthaven said.
